Website Availability Test

A comprehensive website availability check is essential for ensuring that users can reach your site without issues. A complete loss of access is relatively rare, but it can signal serious underlying problems that may get the site removed from search engine results altogether. The same analysis also helps identify security threats, such as hacking attempts or malicious software on your website.

Key Sections of the Website Availability Report

The website availability report is structured into several important sections that provide insights into the performance and reliability of your site.

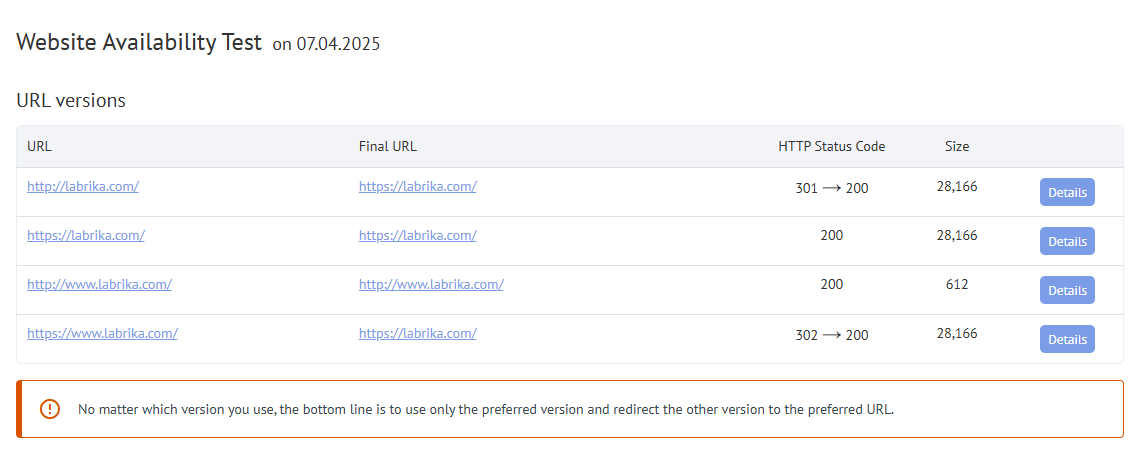

Variations in Domain Spelling

A website is often reachable under several URLs. If there are different variations of the domain name, it is advisable to consolidate them into a single, correct URL. Ideally, your website should support the HTTPS protocol, and all variations should redirect to this one address.

If your website can be accessed through various URLs without proper redirection (for instance, as http://example.com, http://www.example.com, and https://www.example.com), it can create numerous issues. Search engines may index some pages with "www" and others without it. One search engine might index the site as http://, while another lists it as https://. To prevent this, configure domain redirection correctly on the server, for example in the .htaccess file on an Apache server.
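The consolidation check described above can be sketched in a few lines of Python. This is a minimal illustration, not a complete tool: the function names (`domain_variants`, `resolve_final_url`, `find_unconsolidated`) are hypothetical, and the sketch assumes the only variants of interest are the four http/https and www/non-www combinations.

```python
from urllib.request import urlopen


def domain_variants(host):
    """Return the four common spellings of a domain: http/https, with/without www."""
    bare = host[4:] if host.startswith("www.") else host
    return [f"{scheme}://{prefix}{bare}/"
            for scheme in ("http", "https")
            for prefix in ("", "www.")]


def resolve_final_url(url, timeout=10):
    """Follow redirects and return the URL the server finally answers from."""
    with urlopen(url, timeout=timeout) as response:
        return response.geturl()


def find_unconsolidated(host, canonical, resolve=resolve_final_url):
    """Return {variant: where it ended up} for variants that miss the canonical URL."""
    problems = {}
    for variant in domain_variants(host):
        try:
            final = resolve(variant)
        except OSError as exc:  # URLError and socket timeouts are OSError subclasses
            final = f"error: {exc}"
        if final.rstrip("/") != canonical.rstrip("/"):
            problems[variant] = final
    return problems


# Example call (performs real network requests):
# print(find_unconsolidated("example.com", "https://www.example.com/"))
```

An empty result means every variant ends at the canonical address. The `resolve` parameter exists only so the comparison logic can be exercised without network access.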

Accessibility Testing with User-Agent

A "user agent" is client software that requests web pages on someone's behalf, such as a browser or a search bot. It identifies itself to the server through the User-Agent request header. If your website blocks access for certain user agents or returns an error to them, it is crucial to investigate the cause and to decide whether this behavior is acceptable. Otherwise, you risk losing a significant number of users and potential customers without even realizing it.

If the server response for a search engine differs from that shown to standard browsers, it may indicate the presence of cloaking on the website. Significant discrepancies can lead to penalties from search engines, potentially downgrading the site's ranking due to cloaking, even if it was implemented unintentionally or for legitimate reasons. Therefore, analyzing these results is crucial.
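One way to compare what a browser and a search bot receive is to request the same page with two User-Agent headers and measure how similar the answers are. The sketch below is only a heuristic under stated assumptions: the User-Agent strings are illustrative, the 0.9 similarity threshold is an arbitrary starting point, and the names `fetch`, `similarity`, and `responses_diverge` are hypothetical. Pages with dynamic content (timestamps, tokens, rotating banners) can differ between any two requests, so a flagged result calls for manual review rather than proving cloaking.

```python
from difflib import SequenceMatcher
from urllib.error import HTTPError
from urllib.request import Request, urlopen

# Illustrative User-Agent strings; substitute the exact ones you want to test.
USER_AGENTS = {
    "browser": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
                "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"),
    "search_bot": ("Mozilla/5.0 (compatible; Googlebot/2.1; "
                   "+http://www.google.com/bot.html)"),
}


def fetch(url, user_agent, timeout=10):
    """Return (status, body text) for one request sent with the given User-Agent."""
    request = Request(url, headers={"User-Agent": user_agent})
    try:
        with urlopen(request, timeout=timeout) as response:
            status, raw = response.status, response.read()
    except HTTPError as error:
        # 4xx/5xx answers still carry a status and a body worth comparing.
        status, raw = error.code, error.read()
    return status, raw.decode("utf-8", errors="replace")


def similarity(body_a, body_b):
    """Return a ratio between 0.0 (nothing in common) and 1.0 (identical)."""
    if body_a == body_b:
        return 1.0
    return SequenceMatcher(None, body_a, body_b).ratio()


def responses_diverge(browser, bot, min_similarity=0.9):
    """Flag a pair of (status, body) results whose status or content differs."""
    if browser[0] != bot[0]:
        return True
    return similarity(browser[1], bot[1]) < min_similarity


# Example calls (perform real network requests):
# browser = fetch("https://www.example.com/", USER_AGENTS["browser"])
# bot = fetch("https://www.example.com/", USER_AGENTS["search_bot"])
# print(responses_diverge(browser, bot))
```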

Suspicion of Cloaking

Cloaking is an unethical practice where different content is presented to users compared to what search engines see on the same page. All major search engines penalize this tactic by lowering the site's rank in search results, potentially leading to complete exclusion from indexing.

Cloaking can stem from incorrect software configuration or from a hacking incident. For instance, there have been cases where malicious code on a website generated links to other resources in a way that only search engines could detect. This allowed attackers to gain backlinks while concealing their actions for an extended period, resulting in a steady decline in the hacked website's search rankings.

If you suspect cloaking, compare the saved results of the requests with the normal state of the page. If malicious code is detected, consult specialists for remediation. If the behavior is caused by your own software, investigate the underlying issue and fix it.

Different Responses for Mobile Browsers

When accessing a website through mobile browsers, you may receive different responses if a separate mobile version exists or if the site has been compromised and contains malicious redirects. Although the latter scenario is less common, it can severely damage the site's ranking and reputation.

If you know that your website has no distinct mobile version, analyze the server's response. Check for any signs of malware, harmful scripts, or inappropriate content. Hackers often use this method to delay detection of their activities, as mobile devices typically lack robust antivirus protection and website owners may not check mobile versions frequently.

It is not uncommon for website monitoring tools to discover traces of hacking on numerous sites each month. Regular checks help you identify and address such issues before they escalate, protecting the security and availability of your site.
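A simple first check for malicious mobile redirects is to request the page with a mobile User-Agent and see which host finally answers. The following sketch assumes you know the full set of hosts your site legitimately uses. The User-Agent string is illustrative, and `final_url` and `is_unexpected_redirect` are hypothetical helper names. It only catches HTTP-level redirects, so redirects triggered by JavaScript in the page would need a different approach.

```python
from urllib.parse import urlsplit
from urllib.request import Request, urlopen

# Illustrative mobile User-Agent string; any current mobile browser string will do.
MOBILE_UA = ("Mozilla/5.0 (iPhone; CPU iPhone OS 17_0 like Mac OS X) "
             "AppleWebKit/605.1.15 (KHTML, like Gecko) Version/17.0 "
             "Mobile/15E148 Safari/604.1")


def final_url(url, user_agent=MOBILE_UA, timeout=10):
    """Follow HTTP redirects as the given user agent and return the last URL."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=timeout) as response:
        return response.geturl()


def is_unexpected_redirect(final, allowed_hosts):
    """Return True when the final URL's host is not one the site owner expects."""
    host = urlsplit(final).hostname or ""
    return host not in {h.lower() for h in allowed_hosts}


# Example calls (perform a real network request):
# landed = final_url("https://www.example.com/")
# print(is_unexpected_redirect(landed, {"www.example.com", "m.example.com"}))
```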

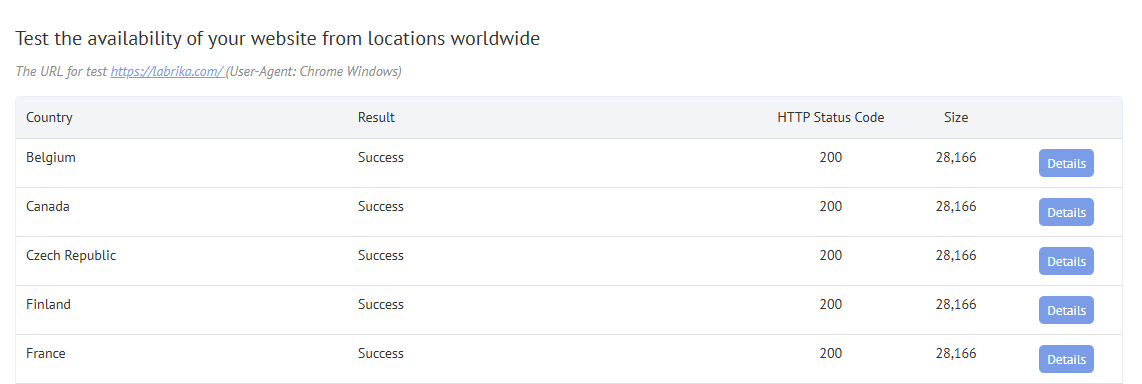

Testing Accessibility from Different Countries

The report includes analysis results on accessibility from various countries. Traffic from specific regions is sometimes blocked to mitigate threats during a DDoS attack. If such blocking becomes permanent, however, it can lead to significant traffic loss: users who live in or travel to the blocked countries, or who reach the site through proxies or VPNs located there, will be unable to connect.

To ensure that your website remains accessible globally, it is crucial to regularly check website availability from different locations. Utilizing tools like an online availability checker can help you monitor how your site performs across various regions, ensuring that users everywhere can access your content without issues.

Website Performance Check

A comprehensive website performance check is essential for maintaining an optimal user experience and retaining search engine visibility. This process involves evaluating several critical metrics, including loading speed, responsiveness, and overall site health. By conducting regular website health checks, you can identify potential issues before they escalate into significant problems, ensuring your website remains in top condition.

Performance monitoring tools can help you track various aspects of your website's functionality, including server uptime and response times. Keeping an eye on these metrics allows you to make informed decisions about improvements and optimizations needed to enhance user experience. Furthermore, uptime testing tools can alert you to any downtime incidents, enabling you to address issues promptly and minimize disruption.

Server Uptime Report

Server uptime reports provide valuable insights into the reliability of your hosting service. These reports typically show the percentage of time the server was operational versus down over a given period, so you can judge whether your hosting and your uptime monitoring are doing their job. By analyzing these reports, you can identify patterns in downtime and take proactive measures to improve server reliability.
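As a rough illustration of how such a report can be derived, the sketch below computes an uptime percentage and groups failed checks into downtime incidents. It assumes the monitoring data is a chronologically sorted list of `(timestamp, is_up)` pairs taken at equal intervals. That data layout and the function names are assumptions made for this example, not the format of any particular tool.

```python
def uptime_percent(checks):
    """Return the share of successful checks as a percentage.

    checks: list of (timestamp, is_up) pairs taken at equal intervals.
    """
    if not checks:
        raise ValueError("no checks recorded")
    successful = sum(1 for _, is_up in checks if is_up)
    return 100.0 * successful / len(checks)


def downtime_incidents(checks):
    """Group consecutive failed checks into (first_failure, last_failure) pairs."""
    incidents = []
    start = last = None
    for timestamp, is_up in checks:
        if not is_up:
            if start is None:
                start = timestamp  # a new incident begins
            last = timestamp
        elif start is not None:
            incidents.append((start, last))  # the incident ended before this check
            start = None
    if start is not None:
        incidents.append((start, last))  # still down at the end of the log
    return incidents
```

With one check per minute, a log that fails at minutes 3, 4, and 8 out of ten checks gives 70% uptime and two incidents, which makes it easy to see whether downtime is a single long outage or many short ones.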

For businesses that rely heavily on their online presence, maintaining high uptime is critical. Frequent downtimes not only affect user experience but can also lead to lost revenue and diminished trust among your audience. Therefore, it is essential to implement robust website status monitoring solutions that provide real-time alerts about any issues, allowing you to respond quickly and effectively.

Importance of Continuous Monitoring

Continuous website status monitoring is crucial for maintaining a reliable online presence. By using automated monitoring solutions, you can receive instant alerts regarding any downtime or performance issues, enabling you to address them immediately. This proactive approach not only enhances user experience but also protects your site's reputation and search engine rankings.
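The core of an automated monitor is a loop that polls the site and sends an alert when its state changes. The sketch below shows one possible shape for that loop. The `check` and `notify` callables are placeholders you would supply (for example, an HTTP request and an email or chat message), and the choice of three consecutive failures before alerting is an assumed default meant to avoid alarms on a single transient error.

```python
import time


def monitor(check, notify, failures_before_alert=3, interval=60,
            rounds=None, sleep=time.sleep):
    """Poll check() and call notify(message) when the site goes down or recovers.

    check:  callable returning True when the site is reachable.
    notify: callable receiving a short status message.
    rounds: number of polls to run; None means run until interrupted.
    """
    consecutive_failures = 0
    alerted = False
    done = 0
    while rounds is None or done < rounds:
        if check():
            if alerted:
                notify("recovered")
            consecutive_failures = 0
            alerted = False
        else:
            consecutive_failures += 1
            # Alert once per outage, only after several failures in a row.
            if consecutive_failures >= failures_before_alert and not alerted:
                notify("down")
                alerted = True
        done += 1
        if rounds is None or done < rounds:
            sleep(interval)
```

Alerting on state changes rather than on every failed poll keeps the number of notifications manageable during a long outage.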

Regularly checking your website's performance and availability helps ensure that you can deliver a seamless experience to your users. Whether through a website downtime checker or performance testing tools, staying informed about your website's status is essential for long-term success in the digital landscape.

Conclusion

A thorough analysis of website accessibility and performance is essential for ensuring a seamless user experience and maintaining visibility in search engine results. By regularly checking website availability, monitoring server uptime, and performing comprehensive website health checks, you can proactively address potential issues and optimize your site for success.

Tools such as online availability checkers and uptime testing tools help you stay informed about your website's performance and ensure it meets the needs of your users. Regular checks and monitoring are not just best practices; they are essential strategies for maintaining the integrity and reliability of your online presence.