Not Responding pages

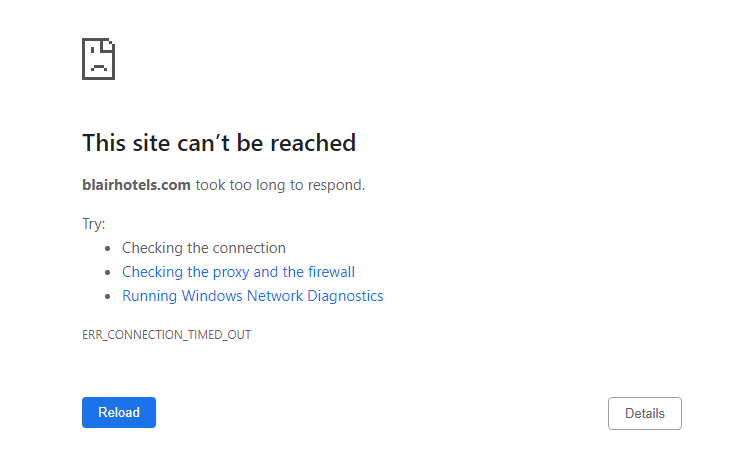

"Not Responding" pages are pages that did not receive a response from the server when requested.

This error is reported, for example, when a web page fails to load or when the request times out before the server replies.

Web browsing uses the HTTP communication protocol

Every HTTP interaction includes a request and a response. A server connection timeout means that the server has taken too long to respond to a request for data.

Timeouts are not a response message. They appear when there is no response and the request is not completed within a predetermined period.
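The difference between "the server answered with an error" and "the server did not answer at all" can be illustrated with a short Python sketch. It uses only the standard library; the function name `probe` and the 10-second default are assumptions made for illustration, not part of any particular tool.

```python
import socket
import urllib.error
import urllib.request


def probe(url: str, timeout: float = 10.0) -> str:
    """Return a short label describing how the server reacted to a GET request."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return f"response {response.status}"
    except urllib.error.HTTPError as err:
        # 4xx/5xx: the server did answer, just with an error status.
        return f"response {err.code}"
    except (TimeoutError, socket.timeout):
        # The request was sent, but no reply arrived within the time limit.
        return "no response (timed out)"
    except urllib.error.URLError as err:
        # Connection-level failures (refused, DNS error, connect timeout).
        if isinstance(err.reason, (TimeoutError, socket.timeout)):
            return "no response (timed out)"
        return f"no response ({err.reason})"
```

A page that returns 404 or 500 still counts as having responded here; only the last two branches correspond to a page that is not responding.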

Why do some pages become unresponsive?

Site pages can stop responding for various reasons:

- Problems or malfunctions on the servers where the site is hosted, for example equipment breakdown, a network outage, maintenance work, or other faults on the hosting side.

- Server overload due to the site's current hosting limits. For example, under high traffic the channel bandwidth or the server's physical capacity may be insufficient. In this case the site may show its content only partially. This often happens during peak hours, but it can also occur when search robots are actively indexing the site.

- On shared hosting, another site on the same server may receive so much traffic that the other hosted sites experience performance issues.

- DDoS attacks are another cause of server overload: an attacker generates a massive number of simultaneous requests to a site. Such attacks are sometimes ordered by competitors.

- Technical problems can also cause unresponsive pages, such as:

  - Unoptimized scripts.

  - A server located very far away, in another country.

  - Confusing redirect chains.

  - An incorrectly configured .htaccess file (the file that lets you manage the operation of the web server and site settings).

  - Viruses on the site that interfere with its functioning.

  - Errors in the code.

  - Pages that are too large.

- A long wait for the server's response is a common characteristic of slow sites. It can affect either the entire web resource or single pages deep within the site. In this case, the speed of the site directly influences its availability: slow pages are effectively inaccessible to users who will not wait several minutes for the server to respond, and to search robots, which do not wait for a response for more than 30 seconds.

- Lapsed payments or renewals: the hosting was not paid for on time, the domain name was not renewed, or the SSL certificate was not renewed.

Why is it important to know about inaccessible pages?

Slow page loading, a complete website failure, or the unavailability of individual pages leads to a loss of traffic and potential customers, who may go to competitors' sites for a better experience. For you as the business owner, this means a hit to the bottom line. The issue is compounded when your SEO indicators, and therefore your position in the search rankings, also decline.

The availability of a site and the speed of the server's response have a direct effect on the site's position in the search results, and its indexing. The longer the wait for a response from the server, the more difficult it becomes for a site to be indexed by the search robots. This leads to only partial indexing of a web resource.

This then also affects the speed with which new updates to pages are indexed by the bots. Instead of days or weeks, it may now take months for any useful changes you make to your pages to actually be indexed by the search engines.

Essentially, search engines will not place slow or unresponsive sites in high positions in the search results. Ultimately, pages that take too long to respond (30 seconds or more) may be removed from the search results entirely.

Frequent interruptions in the site's functionality and the deterioration in the availability of pages can signal deeper issues. This could be broken equipment, attacks on the server, etc.

This is why checking for inaccessible pages is so important. You can avoid a deterioration in rankings, visitor churn (which leads to SEO issues), and a decrease in sales by checking for these types of unresponsive pages and then eliminating them.

How to find inaccessible pages

It is physically impossible to manually reload every site page every minute to monitor that it's working around the clock. Therefore, the process has to be automated.

Here at Labrika, we list the pages for which the server did not respond when requested in the "Not Responding pages" report.
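To illustrate what such an automated check involves (this is a generic sketch, not a description of how Labrika's report works), the following Python code requests each URL once and collects those that gave no response. The names `check_url` and `not_responding` are hypothetical, and the 30-second default simply mirrors the search-robot limit mentioned earlier in this article.

```python
import http.client
import time
import urllib.error
import urllib.request


def check_url(url: str, timeout: float = 30.0) -> dict:
    """Request one URL and record its status code and response time."""
    started = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        status = err.code  # the server answered, but with an error status
    except (OSError, http.client.HTTPException):
        status = None  # timeout, refused connection, DNS failure, broken reply
    seconds = round(time.monotonic() - started, 2)
    return {"url": url, "status": status, "seconds": seconds}


def not_responding(urls, timeout: float = 30.0) -> list:
    """Return the check results for URLs that gave no response at all."""
    results = [check_url(url, timeout) for url in urls]
    return [result for result in results if result["status"] is None]
```

Running `not_responding` on a schedule (for example from cron) against the list of site URLs gives a basic availability monitor; the `seconds` field can also be logged to track server response time.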

Google Recommendations

Google's search quality team recommends that a site's status be labelled appropriately to ensure that a site's unavailability for technical reasons does not negatively impact a site's overall reputation.

Your best bet is to return status code 503 (Service Unavailable). This informs the search robot that the server is temporarily unable to process requests for technical reasons (maintenance, overload, etc.).

In this case, you can tell visitors and bots when the website will resume functioning. If you know the length of the downtime in seconds, or the estimated date and time of its end, you can specify it in the "Retry-After" header. Googlebot uses this to determine the right time to re-index the URL.

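Example of a 503 response (PHP):

```php
// Mark the outage as temporary; Retry-After takes an HTTP-date or a number of seconds.
header('HTTP/1.1 503 Service Temporarily Unavailable');
header('Retry-After: Sat, 08 Oct 2011 18:27:00 GMT');
```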
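The Retry-After value can be given either as a number of seconds or as an HTTP-date. As a small sketch of how both forms can be produced, the Python helpers below use only the standard library; the function names are hypothetical and chosen for illustration.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime


def retry_after_seconds(downtime: timedelta) -> str:
    """Format the expected downtime as a whole number of seconds."""
    return str(int(downtime.total_seconds()))


def retry_after_date(end: datetime) -> str:
    """Format the expected end of the downtime as an HTTP-date in GMT.

    A naive datetime is interpreted as local time by astimezone().
    """
    return format_datetime(end.astimezone(timezone.utc), usegmt=True)


print(retry_after_seconds(timedelta(hours=1)))
# → 3600
print(retry_after_date(datetime(2011, 10, 8, 18, 27, tzinfo=timezone.utc)))
# → Sat, 08 Oct 2011 18:27:00 GMT
```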

It is good practice to return a 503 status code for:

- Server errors

- Site unavailability

- Closures for maintenance or redesign

- Traffic overload

- Stub pages.

If the site's own server is unavailable, you can change the site's DNS to point to a temporary server that returns a 503 response.

However, do not treat the 503 status code as a permanent solution to the problem. A prolonged 503 can be seen as a sign that the server has become permanently unavailable, and the site's pages can then be removed from the Google index.
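A temporary server of this kind can be very small. The following Python sketch, built on the standard `http.server` module, answers every request with 503 and a Retry-After header. The one-hour value, the default port, and the names `MaintenanceHandler` and `make_server` are assumptions for illustration; a production setup would more likely do this in the web server or load balancer configuration.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

RETRY_AFTER_SECONDS = 3600  # assumed length of the downtime, in seconds


class MaintenanceHandler(BaseHTTPRequestHandler):
    """Answer every request with 503 Service Unavailable."""

    def _unavailable(self):
        body = b"Service temporarily unavailable. Please try again later.\n"
        self.send_response(503)
        self.send_header("Retry-After", str(RETRY_AFTER_SECONDS))
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        if self.command != "HEAD":  # HEAD responses carry no body
            self.wfile.write(body)

    # Use the same reply for the most common request methods.
    do_GET = do_HEAD = do_POST = _unavailable


def make_server(host: str = "127.0.0.1", port: int = 8080) -> ThreadingHTTPServer:
    """Create (but do not start) the maintenance server."""
    return ThreadingHTTPServer((host, port), MaintenanceHandler)
```

Calling `make_server().serve_forever()` starts the server; every URL on it then returns the same 503 response until the process is stopped.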

How do I fix the problem?

- Contact the web host that hosts your site and report the problem.

- Make sure you have a fresh backup copy of the site. If data is lost due to a failure on the hosting side, you will need to restore the site from the saved backups.

- If your site becomes unavailable regularly, you may need to move it to another, more reliable hosting service.

- The speed of a site's response to requests may depend on the load (server or channel) due to traffic. To solve this problem, you need to monitor the server response time and channel load.

- Recurring timeouts (requests that exceed the server response timeout) may be an indication that your site has "outgrown" its initial hosting package. It likely needs more resources for stable operation.

- For slow site issues, you can enable server caching or enable cloud caching for the site. This usually removes the problem of partial inaccessibility of the site due to the long server response time.

- Heavy pages can also cause these types of errors. The best solution in this case is to optimize and reduce the content on the page. Read more about this in a separate article here on Labrika.

- If you often get 50X errors, it is most likely an issue in the site or server settings. In this case, you should contact the developers.

- Keep an eye on the validity period of certificates and domains, and make sure they are always renewed in a timely manner. Labrika monitors the validity of domain names by showing the date the domain was paid in a technical audit summary report. Our report also checks the expiration date of a site's SSL certificate and displays it in the "Security" report.
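For a quick manual check of a certificate's expiry date outside of any report, a sketch along the following lines can be used. It relies on Python's standard `ssl` module; the function names are hypothetical, and `certificate_not_after` needs network access to the host being checked.

```python
import socket
import ssl
from datetime import datetime, timezone


def certificate_not_after(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Fetch the notAfter field of the certificate presented by the host.

    An already expired certificate fails verification and raises
    ssl.SSLCertVerificationError instead of returning a value.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: datetime) -> int:
    """Whole days between now (timezone-aware) and the notAfter timestamp."""
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    return (expires - now).days


# Offline example with a fixed notAfter string and a fixed "now".
print(days_until_expiry("Jan  5 09:34:43 2018 GMT", datetime(2018, 1, 1, tzinfo=timezone.utc)))
# → 4
```

For a live check, pass the result of `certificate_not_after("example.com")` together with `datetime.now(timezone.utc)`, and alert when the number of days drops below a threshold of your choice.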