SEO Cloaking Explained and How to Avoid Issues

SEO cloaking is a technique where the website shows different content to search engines than to normal website users.

It is a black hat technique used to fool search engines. The aim is to obtain higher rankings in the results page, or to redirect users to undesirable pages or websites.

What is SEO cloaking?

As mentioned above, cloaking is a black hat technique that shows one type of content to visitors and another to Googlebot and other search engine bots or spiders. It is an attempt to fool search engine bots into thinking the page contains something other than what visitors actually see.

Cloaking is a serious breach of Google's quality guidelines. Any site found to be in breach will likely be downgraded in the rankings or deindexed completely.

For example, an infected website might show Flash or video content to normal users but text to search engines. The text content may be about home remodelling while the video shows porn.

Of course, this became a major problem for search engines. Users were dissatisfied with the misleading, poor user experience. And as we know Google wants to keep users coming back, so this was an issue that had to be fixed.

Why was cloaking used?

Originally it was a black hat SEO technique used to get a quick boost in the rankings. Nowadays it's a popular hacker technique. When hackers infiltrate a website, they can inject links and code into your web pages that are only seen by bots and not by normal visitors. Alternatively, they can redirect users to another website without the webmaster’s knowledge, basically stealing the website's traffic. Genuine website owners would never knowingly permit cloaking because the downside could be so severe if search engines detect it.

Is cloaking ever acceptable?

Using a user’s data to return slightly different information is a perfectly legitimate technique. One example is geolocation, where users from a different city, state or country are served a different version of your site. This could be for language, currency, local advertising and so on.

Serving different versions of pages to mobile devices and desktops is also perfectly normal. It only becomes a problem when different types of content are served to the search engine and the user. This is when a website is likely to be penalized, as the intent is to deceive both the search engine and the user.

How is cloaking done?

A user agent is the software used to access a website. Your browser is a user agent. A technique used frequently on Apache servers, which are common on Linux hosting, is to hijack the .htaccess file and insert mod_rewrite rules. These rules can distinguish normal visitors from search engine bots by testing the %{HTTP_USER_AGENT} server variable, which holds the User-Agent header sent with each request. The server then simply serves two different versions of a page's content: one for you and a totally different one for search engine bots. Another variation is to focus specifically on Googlebot’s IP addresses and serve different content when one is detected.
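As a rough illustration of what to look for, the sketch below scans the text of an .htaccess file for rewrite conditions that test the User-Agent against crawler names. The `BOT_NAMES` list, the function name and the sample rules are assumptions made for this example, not a complete signature of any real attack. Legitimate rules can also test the user agent (for instance, to block abusive bots), so every hit needs manual review.

```python
import re

# Crawler names a cloaking rule might test for (illustrative, not exhaustive).
BOT_NAMES = ("googlebot", "bingbot", "yandex", "baiduspider", "slurp")

# Matches a mod_rewrite condition on the User-Agent request header.
UA_CONDITION = re.compile(
    r"^\s*RewriteCond\s+%\{HTTP_USER_AGENT\}\s+(\S+)", re.IGNORECASE
)


def find_suspicious_conditions(htaccess_text):
    """Return (line_number, line) pairs where a RewriteCond tests the
    User-Agent against one of the crawler names above."""
    hits = []
    for number, line in enumerate(htaccess_text.splitlines(), start=1):
        match = UA_CONDITION.match(line)
        if match and any(bot in match.group(1).lower() for bot in BOT_NAMES):
            hits.append((number, line.strip()))
    return hits


# Hypothetical injected rules, written for this example only.
sample = """\
RewriteEngine On
RewriteCond %{HTTP_USER_AGENT} (googlebot|bingbot) [NC]
RewriteRule ^(.*)$ /hidden/spam.php?page=$1 [L]
"""

for number, line in find_suspicious_conditions(sample):
    print(f"line {number}: {line}")
```

To check a real site, you would read the file's contents from disk and pass them to `find_suspicious_conditions` in place of `sample`.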

The 6 most common methods for delivering cloaked content

Cloaking requires knowledge of programming but black hat tools and plugins are often used. The most common techniques are:

- IP address detection

A user's IP address accompanies every request sent to a web server. Cloaking code can check this address, for example against known search engine crawler addresses, and redirect the request to any page it chooses, on that website or any other.

- User-agent interception

Your browser is one example of a user agent. Search engine spiders and crawlers are other examples. Basically, a user agent is the software that interacts with a website to retrieve data, such as web pages. Web servers can identify the type of user agent and serve up content accordingly.

- JavaScript capability

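Users' browsers usually have JavaScript enabled, whereas many search engine crawlers historically did not execute it. Cloaking scripts exploit this by checking whether JS runs and serving different pages to clients that do not execute it. Modern crawlers such as Googlebot do render JavaScript, so this check is less reliable than it used to be.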

- HTTP Accept-Language header testing

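The ‘Accept-Language’ header of a request lists the languages the client prefers. Browsers normally send it, while crawlers often omit it, so cloaking logic can treat a missing or unusual value as a sign that the visitor is a search engine and simply serve a different web page.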

- HTTP_REFERER checking

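Similarly, the ‘HTTP_REFERER’ value of a request records the page the visitor arrived from. Crawlers usually send no referrer, while users who click through from a results page carry the search engine's address, so cloaking logic can use this value to serve different versions of a web page to each.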

- Hiding text or links

While this is not like the other cloaking techniques in the technical sense, it is an attempt to manipulate search engines and is considered just as undesirable.

Examples are:

- Hiding text behind an image.

- Using a font size of zero.

- Making text foreground color the same as the background color (e.g. white text on a white background).

- Moving text off the screen with CSS positioning or similar methods.
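The hidden-text tricks in this list can be partly checked for automatically. The following is a minimal sketch, assuming the hiding is done with inline `style` attributes. It will not catch rules placed in external stylesheets or text colored to match the background, and the patterns and names (`HIDING_PATTERNS`, `find_hidden_styles`) are illustrative choices for this example. Some matches will be legitimate, such as collapsed menus that use `display:none`.

```python
import re
from html.parser import HTMLParser

# Inline-style patterns often associated with hidden text (illustrative only).
HIDING_PATTERNS = {
    "zero font size": re.compile(r"font-size\s*:\s*0(?![\d.])"),
    "display none": re.compile(r"display\s*:\s*none"),
    "visibility hidden": re.compile(r"visibility\s*:\s*hidden"),
    "off-screen offset": re.compile(r"(?:left|top|text-indent)\s*:\s*-\d{3,}"),
}


class HiddenStyleFinder(HTMLParser):
    """Collects (tag, reason) pairs for tags whose inline style looks hidden."""

    def __init__(self):
        super().__init__()
        self.findings = []

    def handle_starttag(self, tag, attrs):
        # A style attribute without a value is reported as None, hence the "or".
        style = (dict(attrs).get("style") or "").lower()
        for reason, pattern in HIDING_PATTERNS.items():
            if pattern.search(style):
                self.findings.append((tag, reason))


def find_hidden_styles(html):
    """Return every (tag, reason) pair found in the given HTML string."""
    finder = HiddenStyleFinder()
    finder.feed(html)
    return finder.findings


# Hypothetical page fragment, written for this example only.
sample = (
    '<p style="font-size:0">cheap loans</p>'
    '<div style="position:absolute; left:-9999px">more links</div>'
    '<p style="font-size:0.9em">visible text</p>'
)
print(find_hidden_styles(sample))
```

For the sample fragment, the first paragraph and the off-screen `div` are reported, while the paragraph with a normal font size is not.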

How to check if your website is using SEO cloaking

Because hackers can penetrate a website and install cloaking code, you need to check your website periodically as part of webmaster best practices.

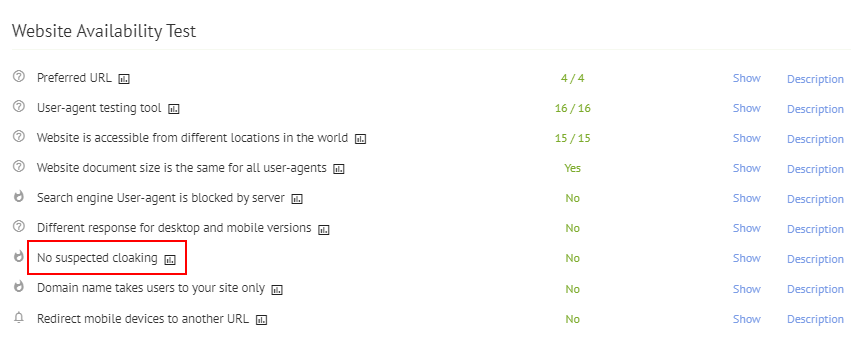

You can use Labrika's website availability test, found in the technical site audit. If there are suspected cloaking issues on your website, a warning will be visible in this audit.

Alternatively, you can use the URL Inspection tool in Google Search Console. This will show you how Google views your pages, and you can then make any fixes to the page's content or code. A quick search for “website cloaking checker” also reveals many free tools that can instantly perform a check. Professional website maintenance services carry out these checks as part of a service package, giving you peace of mind. You can also set up hacking alerts from Google or in Google Search Console.
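For a rough do-it-yourself check, you can request the same URL twice, once presenting a browser-style User-Agent and once a crawler-style one, and compare the two responses. The sketch below uses only the Python standard library. The User-Agent strings, the 0.8 threshold and the function names are assumptions chosen for illustration. It cannot detect cloaking keyed on the crawler's IP address, and pages with rotating ads or other dynamic content may differ for innocent reasons, so treat a low score as a prompt for manual inspection.

```python
import difflib
import urllib.request

# Example header values; the exact strings are assumptions for illustration.
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36"
)
CRAWLER_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch(url, user_agent, timeout=10):
    """Download a page while presenting the given User-Agent header."""
    request = urllib.request.Request(url, headers={"User-Agent": user_agent})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def similarity(body_a, body_b):
    """Compare two pages line by line; 1.0 means identical, 0.0 means no shared lines."""
    matcher = difflib.SequenceMatcher(
        None, body_a.splitlines(), body_b.splitlines(), autojunk=False
    )
    return matcher.ratio()


def looks_cloaked(url, threshold=0.8):
    """Flag the URL when the browser and crawler responses differ too much."""
    return similarity(fetch(url, BROWSER_UA), fetch(url, CRAWLER_UA)) < threshold
```

Calling `looks_cloaked("https://example.com/")` with your own page address in place of the example URL returns `True` when the two versions share less than 80% of their lines.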

How to fix SEO cloaking issues

Once you know you have a cloaking issue on your website, it may be time to call in a professional. Finding the injected code and removing it can be both laborious and technical, and a professional can also confirm that no further issues remain. Cloaking issues require urgent attention as they can cost you rankings, and therefore money, very quickly. Checking for cloaking should become a regular part of your webmaster best practices going forward.

For ease, and peace of mind, this can be carried out in the Technical site audit > Website availability test with us here at Labrika.