Reduce Index Bloat and Improve Your Website's Search Visibility

What is index bloat?

Index bloat occurs when a website with a high page count is 'bloated' with low-value pages. Search engines index these pages, which negatively affects your site's performance in search.

Why is index bloat bad for SEO?

The main issue is that the low-value pages outweigh the high-value ones, so search engines may come to view your site as a whole as low value. However much effort you put into your high-value pages, it can be cancelled out by the low-value ones.

Your primary aim with SEO is that a search engine's crawler is able to:

- Find the content you want it to find.

- Rank it well in the search results.

- Ignore content that you don't want indexed.

When a website has a high page count, but many of those pages are of low quality, crawlers spend your limited crawl budget on them instead of on the pages that matter. This can then degrade the overall ranking of your site in the search engines. For this reason, index bloat is something to check for regularly.

What are the causes of index bloat?

E-commerce sites are among the main sufferers of index bloat, as they typically have many product pages. Even after products are no longer available, the URL and page may still be indexed. Product search and filtering features can also generate hundreds or thousands of 'bloated' pages. There are also many other causes of index bloat, such as:

- Internal duplicate links and pagination.

- Tracking URLs that include a query string at the end.

- Auto-generated user profiles.

- Site development, migration and rebuilds also often leave behind useless test pages.

- Blog websites frequently generate archive pages such as monthly archives, blog tags, category tags and so on. Over time these build up into substantial bloat content.

- A badly ordered XML sitemap and internal linking. When a sitemap isn't properly thought out it can result in wasted crawl budget. After the crawler has crawled the pages listed in the sitemap, it will then start following internal links, resulting in a far higher page count.

- General low-value content pages such as 'thank you' or testimonial pages. These would be considered low-quality or thin content, and shouldn't be indexed by search engine crawlers.

Essentially, every page listed by a search engine that does not give value to the user is index bloat. Some cannot be avoided, but the aim should be to minimize them as much as possible.
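To illustrate the tracking-URL cause from the list above, here is a minimal Python sketch that strips tracking parameters so that variants of the same page can be grouped together. The parameter names in `TRACKING_PARAMS` and the `example.com` URLs are assumptions for illustration; adjust them to whatever your own analytics setup appends.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical list of tracking parameters; extend it to match your own setup.
TRACKING_PARAMS = {
    "utm_source", "utm_medium", "utm_campaign",
    "utm_term", "utm_content", "gclid", "fbclid",
}


def strip_tracking(url: str) -> str:
    """Return the URL without known tracking parameters and without a fragment."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each cleaned URL to its variants, keeping only URLs seen more than once."""
    groups = {}
    for url in urls:
        groups.setdefault(strip_tracking(url), []).append(url)
    return {clean: variants for clean, variants in groups.items() if len(variants) > 1}
```

Running `group_duplicates` over a list of indexed URLs gives a rough picture of how many indexed entries are really the same page reached through different tracking links.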

How to fix index bloat on your website

You really have two options:

- You delete the unwanted pages.

- You tell the search engines not to index them.

As simple as this sounds, it may take some time to do. It may also take some time for your work to show positive results. Rest assured, however, that it will pay off over time. To establish which pages need to be removed, analyse the index rate of your website, making sure to list the important pages that must be indexed. Then compare this list with the pages that Google has actually indexed. The excess is the index bloat that you want to get rid of.
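The comparison described above is essentially a set difference. The sketch below assumes you already have two plain lists of URLs: the pages you want indexed and the pages a search engine reports as indexed (for example, from an export). The function names and the trailing-slash normalisation are illustrative simplifications, not a fixed method.

```python
def normalize(url: str) -> str:
    """Simplified normalisation: trim whitespace and any trailing slash."""
    return url.strip().rstrip("/")


def find_index_bloat(important_urls, indexed_urls):
    """Compare the pages you want indexed with the pages that are indexed."""
    important = {normalize(u) for u in important_urls}
    indexed = {normalize(u) for u in indexed_urls}
    return {
        # Indexed but not on your list: candidates for removal or noindex.
        "bloat": sorted(indexed - important),
        # On your list but not indexed: worth investigating separately.
        "missing": sorted(important - indexed),
    }
```

The `missing` list is a side benefit of the same comparison: it shows important pages that have not been indexed at all.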

You can start by targeting the low-hanging fruit: pages you can easily identify in your XML sitemap that shouldn't be there. Remove them from your sitemap, and/or delete them if they no longer serve any purpose.
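A first pass over the sitemap can be automated. The following sketch parses a standard XML sitemap and flags URLs that match a few patterns. The patterns in `LOW_VALUE_PATTERNS` are hypothetical examples; which URLs count as low value depends entirely on your site.

```python
import re
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical patterns for pages that probably do not belong in a sitemap.
LOW_VALUE_PATTERNS = [r"/thank-you", r"/tag/", r"/test-page"]


def flag_sitemap_urls(sitemap_xml: str, patterns=LOW_VALUE_PATTERNS):
    """Return the sitemap URLs that match any of the given patterns."""
    root = ET.fromstring(sitemap_xml)
    compiled = [re.compile(p) for p in patterns]
    flagged = []
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        if any(p.search(url) for p in compiled):
            flagged.append(url)
    return flagged
```

The output is only a list of candidates. Review it by hand before removing anything from the sitemap.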

You can identify other offending pages in several ways:

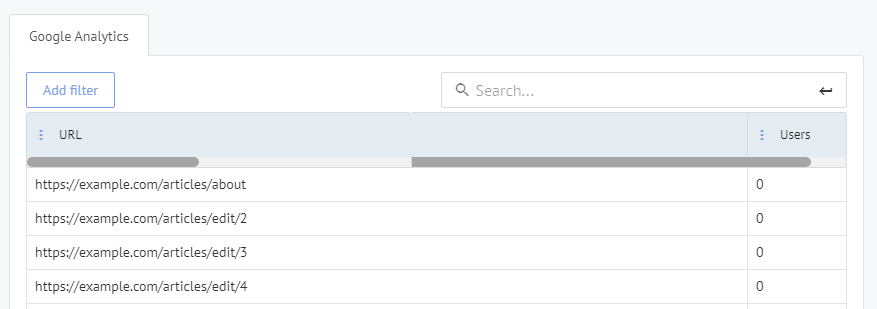

- Use an online service, such as Labrika, to identify them for you. You can do this via our ‘User behaviour data’ report, in the section ‘pages without any traffic’. This is likely the easiest option.

- Analyze your log files and find pages that users are visiting that perhaps you didn't know about, and other low-value pages. You may find some surprises!

- Check the 'index coverage report' in Google Search Console, which lists the pages that Google has indexed for your website.
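For the log file option, a short script is often enough to get started. The sketch below assumes your server writes the common 'combined' access log format; if your format differs, the regular expression will need adjusting. Keep in mind that user-agent strings can be faked, so a match on "Googlebot" is only an indication.

```python
import re
from collections import Counter

# Matches the request, status and user-agent fields of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def count_hits(lines, agent_substring=""):
    """Count requests per path, optionally only for agents containing a substring."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and agent_substring.lower() in match.group("agent").lower():
            hits[match.group("path")] += 1
    return hits
```

Calling `count_hits(lines, "Googlebot").most_common(20)` shows where a crawler spends most of its requests, while paths with many crawler hits and few visitor hits are worth a closer look.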

You can also restrict access to content and prevent indexing by web crawlers

While you can't prevent web crawlers from accessing a publicly available page, you can instruct them not to index it. Most search engines will obey this directive, but others may not, so this isn't a foolproof method.

If you have content that is truly confidential, you need more advanced security measures to block web crawlers. One of these is the .htaccess file on Apache web servers, which can control who sees what in individual folders. However, this is a complex, technical process, not to be undertaken by beginners!
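One common form of this instruction is a robots meta tag with the value noindex in the page's head. As a rough way to audit which pages already carry it, the sketch below checks a page's HTML using only the Python standard library. The class and function names are made up for this example, and it does not look at HTTP headers.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for token in attr.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives
```

Feeding it the HTML of each low-value page gives a quick list of the pages that still lack the directive.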

4 easy ways to fix index bloat

- Delete duplicate pages, unwanted pages, old test pages and so on.

- Remove low-value pages from your XML sitemap and mark them with a noindex meta tag in the HTML <head> section. This can be done like this:

    <head>
      <meta name="robots" content="noindex">
    </head>

You can enter this manually or via a plugin such as Yoast on a WordPress site.

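- Set a disallow directive in your robots.txt file to indicate which folders or individual pages not to crawl. Compliant crawlers will then skip this content. Note that a disallowed URL can still appear in the index if other pages link to it, and that crawlers cannot see a noindex tag on a page they are not allowed to crawl, so do not rely on both for the same page.

    User-agent: googlebot
    Disallow: /testimonials/
    Disallow: /checkout/
    Disallow: /content-page1.htm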

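- Set a noindex directive in an X-Robots-Tag HTTP response header. The pages will be crawled but not indexed by search engines, and this also works for non-HTML files such as PDFs.

    X-Robots-Tag: noindex

Note that the older practice of adding a Noindex line to your robots.txt file no longer works: Google never officially documented it and stopped honouring it on 1 September 2019.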
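Before deploying robots.txt rules, it can help to check what they actually block. The sketch below uses Python's standard `urllib.robotparser` with rules modelled on the disallow example in this list. The `example.com` URLs are placeholders. The standard-library parser uses plain prefix matching, so its answers may differ from a particular search engine's for rules that use wildcards.

```python
from urllib.robotparser import RobotFileParser

# Rules modelled on the disallow example in this article.
ROBOTS_TXT = """\
User-agent: googlebot
Disallow: /testimonials/
Disallow: /checkout/
Disallow: /content-page1.htm
"""


def blocked_urls(robots_txt: str, urls, user_agent: str = "googlebot"):
    """Return the URLs that the given user agent is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

Because the rules above only name googlebot, other crawlers are not restricted by them. Use `User-agent: *` if the rules should apply to every compliant crawler.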

Do's and don’ts when fixing index bloat

- Do not allow internal search result pages (generated when a user uses the search bar on your site) to be crawled by search engines. Otherwise, searchers may click a link on the search engine results page only to land on yet another search results page on your website, which is a poor user experience.

- If proxy services generate URLs for your website, do not allow these to be crawled.

- Have a thorough SEO audit performed, either by an SEO specialist or by an online tool such as Labrika. Our user behaviour report allows you to view pages that have no traffic and are therefore likely ‘bloating’ your site.

Summary: finding and fixing index bloat

The primary objective of any search engine is to be the best at serving top quality results for its users. To achieve this, search engines deploy significant resources to identify and discard pages (or whole websites) that don't fulfil their criteria.

This process continues to be improved and refined, which means that we, as SEO professionals and webmasters, must do our best to stay ahead of these issues.

This type of technical SEO issue should become an important part of any website's quality review. Ensure that crawlers are only seeing the best of your content!

Carrying out the fixes we described above is a key step in optimizing your SEO efforts.